RETFound: How to use the retinal AI model RETFound

Foundation models are large, versatile pre-trained neural networks that serve as a starting point for a wide range of machine learning tasks, in natural language processing and beyond, and are typically fine-tuned for specific applications. They are usually trained in a self-supervised manner, which reduces the need for expensive data labelling. These models are particularly interesting because they can quickly adapt to new tasks, as described in this article published by Stanford University.

RETFound demo by Bitfount's customer success manager Calum Burnstone

RETFound

RETFound, recently developed by researchers at Moorfields Eye Hospital and University College London and published in Nature, is the world's first foundation model trained on retinal images. It represents a significant advance in healthcare AI as it demonstrates the enormous potential of tapping into the massive volume of routinely collected medical imaging data, which would otherwise go unused due to the manual effort required to label it. We’re excited to announce that RETFound can now be easily deployed through Bitfount, with pre-configured task templates to help you get started.

Before RETFound, creating AI systems to analyse retinal scans required training a separate model from scratch for each disease to be classified. This was incredibly time-, compute- and data-intensive. RETFound changes everything. Now, researchers can start with RETFound's pre-trained parameters and simply fine-tune the model for their specific task, using far less labelled data and training time, on just a single GPU. This opens the door to a multitude of new, performant and training-efficient AI models for retinal diseases for which no AI-based analysis tools currently exist.

In the Nature paper, the Moorfields/UCL researchers, led by Prof Pearse Keane, demonstrated RETFound's capabilities in identifying and quantifying diseases such as diabetic retinopathy and age-related macular degeneration (AMD). The study also explored RETFound’s applications beyond ophthalmology. Astonishingly, with minimal fine-tuning RETFound was shown to detect numerous other conditions, including heart failure and stroke, and even to predict the onset of Parkinson's disease. All of this is achievable with just a fraction of the typical data requirements.

Statistically, RETFound performs better than the comparison models with just 10% of the training data in 3-year incidence prediction of heart failure and myocardial infarction, and is comparable to other models with 45-50% of the data in diabetic retinopathy. Furthermore, RETFound converges significantly faster than the comparison models even when trained on the same amount of data, saving 80% of training time when adapting to 3-year incidence prediction of myocardial infarction and 46% in diabetic retinopathy.

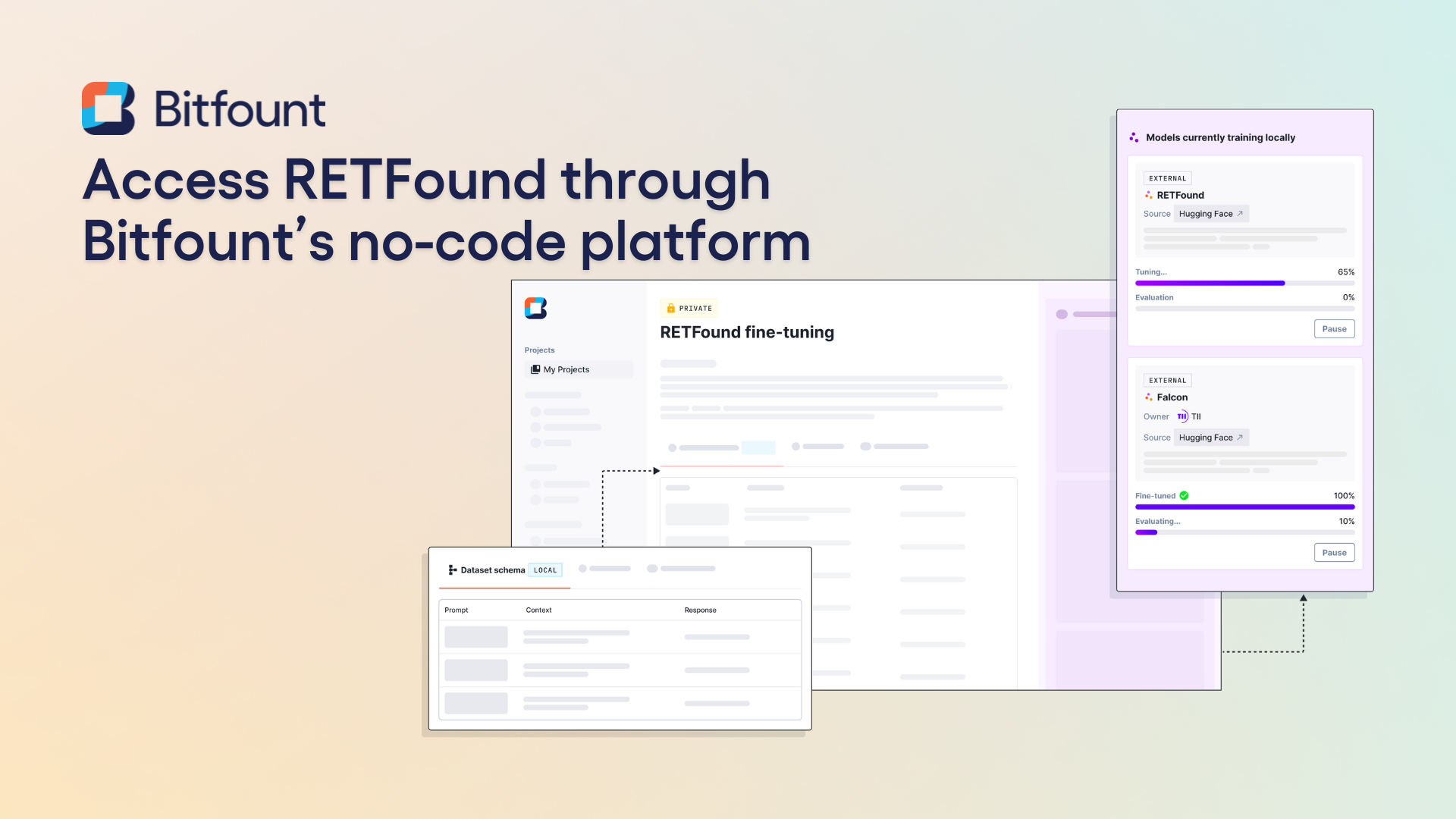

The easiest way to access and fine-tune RETFound

Even though RETFound is an open-source model, the easiest way to access RETFound is through the Bitfount platform. Users can leverage the model for both fine-tuning and inference without needing to write a line of code. Our desktop app and Python SDK allow you to rapidly build and deploy RETFound models tailored to your use case.

Don't have a dataset? No problem. Join a collaborator's project and remotely fine-tune on their private retinal scan data. Or try replicating the published results using open datasets like IDRID.

Once you fine-tune your RETFound model, you can immediately run inference on new retinal images to generate predictions. You can also share this model with collaborators by granting them access to it in the Bitfount platform, all within a secure, private ecosystem with data never leaving its home location.

RETFound and other foundation models represent an enormous leap forward in healthcare AI. Combine them with federated learning and the possibilities are endless, from ophthalmology to cardiology to neurology. We can’t wait to see what you build!

Get started guide

Once you have signed up for a free Bitfount account on our sign-up page and downloaded the Bitfount desktop app from our website, you're ready to get started. Our demo of RETFound in action covers everything needed to conduct secure research using RETFound, including:

- Connecting a training dataset - sample images you collate into different categories (classes) that you want your eventual trained model to identify.

- Creating and running a fine-tuning project - link your dataset to a project and run the task designed to adapt RETFound to your specific research application.

- Connecting a dataset to analyse - an unlabelled dataset which will be used to test your newly trained model.

- Creating an inference project - the training process generates updated model parameters for your specific use case. These are then fed into a new project that enables automatic classification of your retinal images.

- Running the classification task - link your dataset to the project and see your adapted RETFound model in action, classifying your images into the classes you defined in step one.

FAQs

What is a Foundation Model?

A foundation model is an AI model trained on a broad, generalised dataset; in the case of RETFound, over a million retinal images. Foundation models can be adapted and applied to a range of specific downstream tasks.

What is model fine-tuning?

It is the process of taking a general foundation model and training it for use in a specific application. For example, training RETFound to classify retinal diseases that you are interested in researching.
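
As a rough illustration of the idea (not RETFound's or Bitfount's actual training code), the sketch below keeps a stand-in "pre-trained" feature extractor fixed and trains only a small new classification head on a handful of labelled examples. Everything in it is hypothetical: `pretrained_features`, the toy "images" and the hyperparameters are invented for the example, and real fine-tuning works on a far larger network and may also update the pre-trained weights themselves.

```python
import math
import random


def pretrained_features(image):
    """Stand-in for a frozen pre-trained encoder (hypothetical).

    An "image" here is just a list of pixel intensities, and the "features"
    are its mean and its spread. A real foundation model would output a
    learned embedding instead.
    """
    mean = sum(image) / len(image)
    spread = max(image) - min(image)
    return [mean, spread]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(images, labels, epochs=500, lr=0.5):
    """Train a new logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(img) for img in images]
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = pred - y  # gradient of the log-loss with respect to the logit
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predict(image, weights, bias):
    """Probability that the image belongs to class 1."""
    x = pretrained_features(image)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy labelled data: class 1 "images" are brighter than class 0 "images".
random.seed(0)
dark = [[random.uniform(0.0, 0.4) for _ in range(16)] for _ in range(10)]
bright = [[random.uniform(0.6, 1.0) for _ in range(16)] for _ in range(10)]
images = dark + bright
labels = [0] * 10 + [1] * 10

weights, bias = fine_tune_head(images, labels)
```

After training, `predict(new_image, weights, bias)` returns a probability for class 1. The point of the sketch is that only a small number of labelled examples is needed because the feature extractor already exists.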

What are classes?

A class is a category or group that a model assigns data to. For example, you might use RETFound to determine whether a fundus image falls within a ‘diabetic retinopathy’ or a ‘normal/other pathology’ class.
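
In code, a classifier's output is commonly a list of per-class probabilities that is mapped back to a class name. The snippet below is a minimal sketch of that mapping using the two example classes above; the function name and the probability values are made up for illustration and are not part of RETFound or Bitfount.

```python
CLASS_NAMES = ["diabetic retinopathy", "normal/other pathology"]


def predicted_class(probabilities, class_names=CLASS_NAMES):
    """Return the class name with the highest predicted probability."""
    if len(probabilities) != len(class_names):
        raise ValueError("expected one probability per class")
    best_index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return class_names[best_index]


# Hypothetical model output for one fundus image.
label = predicted_class([0.83, 0.17])
```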

How many images will I need to fine-tune the RETFound model?

This will depend on several factors, such as the level of precision you need from the trained model and the number of image classes. As a general starting point, we recommend a minimum of 100 images per class.
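
A quick way to check a dataset against this guideline is to count the images in each class before connecting it. The sketch below assumes a layout with one sub-folder per class and a particular set of image file extensions; both are assumptions made for the example, not a description of the format Bitfount requires.

```python
from pathlib import Path

MIN_IMAGES_PER_CLASS = 100  # suggested starting point, not a hard rule
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".tif", ".tiff"}  # assumed formats


def count_images_per_class(dataset_dir):
    """Count image files in each class sub-folder of dataset_dir."""
    counts = {}
    for class_dir in sorted(Path(dataset_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def classes_below_minimum(counts, minimum=MIN_IMAGES_PER_CLASS):
    """Return the classes that have fewer images than the minimum."""
    return {name: n for name, n in counts.items() if n < minimum}
```

Calling `classes_below_minimum(count_images_per_class(your_folder))` returns an empty dictionary when every class meets the suggested minimum.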

What kind of images can be used?

There are two 'base' versions of RETFound, one that was pre-trained using fundus images, and another that was pre-trained using OCT images. It is worth noting, however, that the RETFound model is a 2D model. This means that even in the case of OCT volumes, the RETFound model takes a single B-scan at a time as input. The model can of course be used to analyse all B-scans within a volume, but predictions will be generated for each B-scan separately.
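
The per-B-scan behaviour can be sketched as a simple loop over the slices of a volume. In the example below, `dummy_classifier` is a hypothetical stand-in for a fine-tuned model (it just returns the mean pixel intensity), and the volume is a tiny nested list rather than real OCT data.

```python
def classify_volume(volume, classify_bscan):
    """Run a 2D classifier on every B-scan of an OCT volume.

    volume: a sequence of B-scans, each a 2D image (list of pixel rows).
    classify_bscan: any callable that maps one B-scan to a prediction.
    Returns one prediction per B-scan, in the original order.
    """
    return [classify_bscan(bscan) for bscan in volume]


def dummy_classifier(bscan):
    """Hypothetical stand-in for a model: mean intensity used as a 'score'."""
    pixels = [p for row in bscan for p in row]
    return sum(pixels) / len(pixels)


# Toy volume: 3 B-scans of 2x2 pixels each.
volume = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.2, 0.4], [0.6, 0.8]],
    [[1.0, 1.0], [1.0, 1.0]],
]
scores = classify_volume(volume, dummy_classifier)
```

The result contains one entry per B-scan. How, or whether, to combine these into a single volume-level result is a separate decision that depends on the research question.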
